Automating Azure SQL Database backups using docker and GitLab

Everybody wants repeatable tests once in a while, and these tests should use external dependencies with predictable behaviors and values. In this case, our team at Indimin wanted to test our product’s microservices APIs with up-to-date database schema and test data. This data is the same that we use in our QA environment, hosted on Azure as an SQL Database Instance, so we use this database as our inner test database.

I work on ubuntu, so the code snippets refer to a

bashterminal.

Requirements

- Backup database schema and data (only one database)

- These backups should live inside a container (only use Microsoft’s SQL Server 2019 base image).

- We need to continuously make backups, hopefully without human intervention.

- Using our self-managed GitLab instance, there should be a simple-to-use place where we can schedule different database backups on low traffic hours without modifying the automation process.

Let’s address these requirements one by one.

Backup and restore

We need a way to backup schema and data from an Azure SQL Database to our local file system. Luckily, Microsoft offers a tool for this. The sqlpackage

allows us to export and import database schema and data using the bacpac file format.

To backup using a file as a target, we run:

1

2

3

4

5

6

sqlpackage /a:Export \

/ssn:tcp:server_name.database.windows.net,1433 \

/su:sa \

/sp:str()ng!password \

/sdn:database_name \

/tf:backup/backup.bacpac

This generates the following directory structure:

1

2

3

4

5

6

7

8

9

10

11

12

$ ls -lh

drwxr-xr-x 3 root root 4.0K May 12 16:44 IsolatedStorage

-rw-r--r-- 1 root root 2.5M May 12 16:44 backup.bacpac

$ tree . | head

|-- IsolatedStorage

| `-- ulyru5ls.gn3

| `-- i0y5vzn2.ptb

| `-- StrongName.pdsauadni2w1hwlsyjmz1pizhzdilmes

| `-- StrongName.pdsauadni2w1hwlsyjmz1pizhzdilmes

| `-- Files

|-- backup.bacpac

So, my recommendation is to always use an empty directory as the backup output.

This restores the backup to a new database:

1

2

3

4

5

6

7

sqlpackage \

/a:Import \

/tsn:tcp:server_name.database.windows.net,1433 \

/tu:sa \

/tp:str()ng!password \

/tdn:restored_database \

/sf:out/backup.bacpac

Docker container

Now that we have the backup file, it’s time to create a container where it will be restored at startup. This container should be based on the official MSSQL 2019 image

Let’s find out if this image has anything we need for now:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

$ docker run -it --rm -u root mcr.microsoft.com/mssql/server:2019-CU10-ubuntu-16.04 bash

SQL Server 2019 will run as non-root by default.

This container is running as user root.

To learn more visit https://go.microsoft.com/fwlink/?linkid=2099216.

root@96a8b1736184:/# sqlpackage

bash: sqlpackage: command not found

root@96a8b1736184:/# apt-get -qq update && apt-get -qq install tree -y

debconf: delaying package configuration, since apt-utils is not installed

Selecting previously unselected package tree.

(Reading database ... 9990 files and directories currently installed.)

Preparing to unpack .../tree_1.7.0-3_amd64.deb ...

Unpacking tree (1.7.0-3) ...

Setting up tree (1.7.0-3) ...

root@96a8b1736184:/# cd /opt/

root@96a8b1736184:/opt# tree . | grep sqlpackage

Uhm, no sqlpackage binary in the official image, and according to this issue there is no plan to add it soon. The only way is to create a base image on top of it. Let’s do it!

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

FROM mcr.microsoft.com/mssql/server:2019-CU10-ubuntu-16.04

LABEL mantainer="Jose Truyol"

USER root

RUN apt-get update \

&& apt-get install -y unzip \

&& wget -q -O sqlpackage.zip https://go.microsoft.com/fwlink/?linkid=2134311 \

&& unzip -qq sqlpackage.zip -d /opt/sqlpackage \

&& chmod +x /opt/sqlpackage/sqlpackage \

&& apt-get remove --purge unzip -y \

&& rm sqlpackage.zip

ENV PATH=$PATH:/opt/mssql-tools/bin:/opt/sqlpackage

ENV ACCEPT_EULA=Y

ENV MSSQL_PID=Express

USER mssql

Using this image, let’s test sqlpackage

We need to use the

rootuser because themssqluser has no permission to create files or directories.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

$ docker run -it -u root --rm sql.backup bash

SQL Server 2019 will run as non-root by default.

This container is running as user mssql.

To learn more visit https://go.microsoft.com/fwlink/?linkid=2099216.

root@7d27d4b34121:/$ sqlpackage

SqlPackage: Command-line tool for creating and deploying SQL Server databases and DACPAC packages.

Copyright (c) 2016 Microsoft Corporation. All rights reserved.

Help for dynamic property usage.

/@<file>:<string>

Read response file for more options.

/help:[True|False]

(short form /?)

.....

root@7d27d4b34121:/# mkdir backup

root@7d27d4b34121:/# sqlpackage /a:Export \

> /ssn:tcp:server_name.database.windows.net,1433 \

> /su:sa \

> /sp:"str()ng!password" \

> /sdn:database_name \

> /tf:backup/backup.bacpac

Connecting to database 'database_name' on server 'tcp:server_name.database.windows.net,1433'.

Extracting schema

Extracting schema from database

Resolving references in schema model

Validating schema model

Validating schema model for data package

Validating schema

Exporting data from database

Exporting data

Processing Export.

Processing Tables

Successfully exported database and saved it to file '/backup/backup.bacpac'.

Time elapsed 00:00:47.86

root@7d27d4b34121:/#

Now that we have a way to do backups using a docker container it’s time to restore it to a new server. In this case, the new server will be the same container where we do our backup.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

FROM mcr.microsoft.com/mssql/server:2019-CU10-ubuntu-16.04

LABEL mantainer="Jose Truyol <[email protected]>"

USER root

RUN apt-get update \

&& apt-get install -y unzip \

&& wget -q -O sqlpackage.zip https://go.microsoft.com/fwlink/?linkid=2134311 \

&& unzip -qq sqlpackage.zip -d /opt/sqlpackage \

&& chmod +x /opt/sqlpackage/sqlpackage \

&& apt-get remove --purge unzip -y \

&& rm sqlpackage.zip

ENV PATH=$PATH:/opt/mssql-tools/bin:/opt/sqlpackage

ENV ACCEPT_EULA=Y

ENV MSSQL_PID=Express

ENV SA_PASSWORD="!test.Passw0rd"

COPY ./scripts/ /scripts

RUN bash /scripts/backup.sh

USER mssql

CMD [ "/scripts/entrypoint.sh" ]

The backup.sh file content is:

1

2

3

4

5

6

7

8

#!/bin/bash

sqlpackage /a:Export \

/ssn:tcp:server_name.database.windows.net,1433 \

/su:sa \

/sp:"str()ng!password" \

/sdn:database_name \

/tf:backup/backup.bacpac

The default CMD is replaced with the entrypoint.sh file:

1

2

3

#!/bin/bash

/scripts/restore.sh & /opt/mssql/bin/sqlservr

There should be a non-terminating process to keep the container alive. Based on this issue

The /opt/mssql/bin/sqlservr part was found using:

1

2

3

4

$ docker inspect mcr.microsoft.com/mssql/server:2019-CU10-ubuntu-16.04 | jq -r '.[0].Config.Cmd'

[

"/opt/mssql/bin/sqlservr"

]`

The restore.sh file contains:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

#!/bin/bash

# WAITING LOGIC BASED ON https://github.com/microsoft/mssql-docker/blob/master/linux/preview/examples/mssql-customize/configure-db.sh

DBSTATUS=1

ERRCODE=1

i=0

MAX_ITERATIONS=60

while [[ $DBSTATUS -ne 0 ]] && [[ $i -lt $MAX_ITERATIONS ]] || [[ $ERRCODE -ne 0 ]]; do

i=$i+1

DBSTATUS=$(/opt/mssql-tools/bin/sqlcmd -h -1 -t 1 -U sa -P $SA_PASSWORD -Q "SET NOCOUNT ON; SELECT SUM(state) FROM sys.databases")

ERRCODE=$?

sleep 1s

done

if [[ $DBSTATUS -ne 0 ]] || [[ $ERRCODE -ne 0 ]]; then

echo "SQL Server took more than $MAX_ITERATIONS seconds to start up or one or more databases are not in an ONLINE state"

exit 1

fi

sqlpackage \

/a:Import \

/tsn:localhost \

/tdn:restored_db \

/tu:sa \

/tp:$SA_PASSWORD \

/sf:backup/backup.bacpac

Before any restore attempts, the script will execute a query against the database. While this command returns any error we can assume that the database isn’t ready. After 60 iterations, if there is still any error the script exit with an error. This allows us to restore the database as soon as it’s ready and not at an arbitrary waiting time using any sleep command.

Let’s build and run this container:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

$ docker build -t sql.backup .

Sending build context to Docker daemon 8.192kB

Step 1/13 : FROM mcr.microsoft.com/mssql/server:2019-CU10-ubuntu-16.04

......

Successfully tagged sql.backup:latest

$ docker run -it --rm sql.backup

SQL Server 2019 will run as non-root by default.

This container is running as user mssql.

To learn more visit https://go.microsoft.com/fwlink/?linkid=2099216.

2021-05-12 21:01:18.15 Server The licensing PID was successfully processed. The new edition is [Express Edition].

......

*** Error importing database:Could not import package.

Error SQL72014: Core Microsoft SqlClient Data Provider: Msg 12824, Level 16, State 1, Line 5 The sp_configure value 'contained database authentication' must be set to 1 in order to alter a contained database. You may need to use RECONFIGURE to set the value_in_use.

Error SQL72045: Script execution error. The executed script:

IF EXISTS (SELECT 1

FROM [master].[dbo].[sysdatabases]

WHERE [name] = N'$(DatabaseName)')

BEGIN

ALTER DATABASE [$(DatabaseName)]

SET FILESTREAM(NON_TRANSACTED_ACCESS = OFF),

CONTAINMENT = PARTIAL

WITH ROLLBACK IMMEDIATE;

END

Error SQL72014: Core Microsoft SqlClient Data Provider: Msg 5069, Level 16, State 1, Line 5 ALTER DATABASE statement failed.

Error SQL72045: Script execution error. The executed script:

IF EXISTS (SELECT 1

FROM [master].[dbo].[sysdatabases]

WHERE [name] = N'$(DatabaseName)')

BEGIN

ALTER DATABASE [$(DatabaseName)]

SET FILESTREAM(NON_TRANSACTED_ACCESS = OFF),

CONTAINMENT = PARTIAL

WITH ROLLBACK IMMEDIATE;

END

Based on the documentation we can fix this with a simple SQL query before any database restore. The modified restore.sh is:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

#!/bin/bash

# WAITING LOGIC BASED ON https://github.com/microsoft/mssql-docker/blob/master/linux/preview/examples/mssql-customize/configure-db.sh

DBSTATUS=1

ERRCODE=1

i=0

MAX_ITERATIONS=60

while [[ $DBSTATUS -ne 0 ]] && [[ $i -lt $MAX_ITERATIONS ]] || [[ $ERRCODE -ne 0 ]]; do

i=$i+1

DBSTATUS=$(/opt/mssql-tools/bin/sqlcmd -h -1 -t 1 -U sa -P $SA_PASSWORD -Q "SET NOCOUNT ON; SELECT SUM(state) FROM sys.databases")

ERRCODE=$?

sleep 1s

done

if [[ $DBSTATUS -ne 0 ]] || [[ $ERRCODE -ne 0 ]]; then

echo "SQL Server took more than $MAX_ITERATIONS seconds to start up or one or more databases are not in an ONLINE state"

exit 1

fi

sqlcmd -S localhost -P $SA_PASSWORD -U sa -Q "$(cat <<-EOF

sp_configure 'contained database authentication', 1;

GO

RECONFIGURE;

GO

EOF

)"

sqlpackage \

/a:Import \

/tsn:localhost \

/tdn:restored_db \

/tu:sa \

/tp:$SA_PASSWORD \

/sf:backup/backup.bacpac

Let’s try it again:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

$ docker build -t sql.backup .

Sending build context to Docker daemon 8.192kB

Step 1/13 : FROM mcr.microsoft.com/mssql/server:2019-CU10-ubuntu-16.04

......

Successfully tagged sql.backup:latest

$ docker run -it --rm -p 1434:1433 sql.backup

SQL Server 2019 will run as non-root by default.

This container is running as user mssql.

To learn more visit https://go.microsoft.com/fwlink/?linkid=2099216.

......

Successfully imported database.

Time elapsed 00:00:26.18

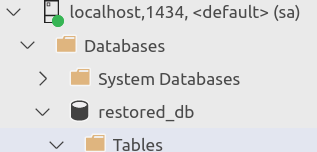

Excellent! At this point, we have a docker container with our Azure Database backup restored in it.

Backup automation

Using our GitLab instance, the go-to way to do this automation is using the CI/CD pipeline feature

I’m used to dividing my pipeline definition between multiple files inside the .gitlab/ci directory. In this case, I need a few files:

1

2

3

4

5

6

7

8

9

10

11

12

$ tree . -a

.

├── Dockerfile

├── .gitlab

│ ├── ci

│ │ ├── backup.gitlab-ci.yml

│ │ └── global.gitlab-ci.yml

│ └── scripts

│ ├── backup.sh

│ ├── entrypoint.sh

│ └── restore.sh

├── .gitlab-ci.yml

Let’s go into each one of them:

.gitlab-ci.yml

1

2

3

4

5

6

7

8

9

10

variables:

DOCKER_VERSION: 19.03.15

GIT_SUBMODULE_STRATEGY: none

stages:

- backup

include:

- local: .gitlab/ci/global.gitlab-ci.yml

- local: .gitlab/ci/backup.gitlab-ci.yml

Here are defined global variables, in this case, the dind (Docker in Docker) version to use as well as the submodule strategy.

Next are the stages and the include statements with the other parts of our pipeline definition.

global.gitlab-ci.yml

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

.default-retry:

retry:

max: 2 # This is confusing but this means "3 runs at max".

when:

- unknown_failure

- api_failure

- runner_system_failure

- job_execution_timeout

- stuck_or_timeout_failure

.use-docker-in-docker:

image: docker:${DOCKER_VERSION}-dind

services:

- docker:${DOCKER_VERSION}-dind

variables:

DOCKER_DRIVER: overlay2

DOCKER_TLS_CERTDIR: ""

This file contains some little templates that we can use in any job. default-retry allows us to run the job up to 3 times if there any failure unrelated to our files. use-docker-in-docker will allow us to use docker in docker in any job that extends it.

backup.gitlab-ci.yml

1

2

3

4

5

6

7

8

9

10

db_backup_image:

extends:

- .default-retry

- .use-docker-in-docker

- .backup:rules:db_backup_image

stage: backup

script:

- echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin $CI_REGISTRY

- docker build -t $CI_REGISTRY_IMAGE:latest .

- docker push $CI_REGISTRY_IMAGE:${DB_NAME}

This is the main job of the pipeline. It will log in against our docker registry (also GitLab), build our image, and push it.

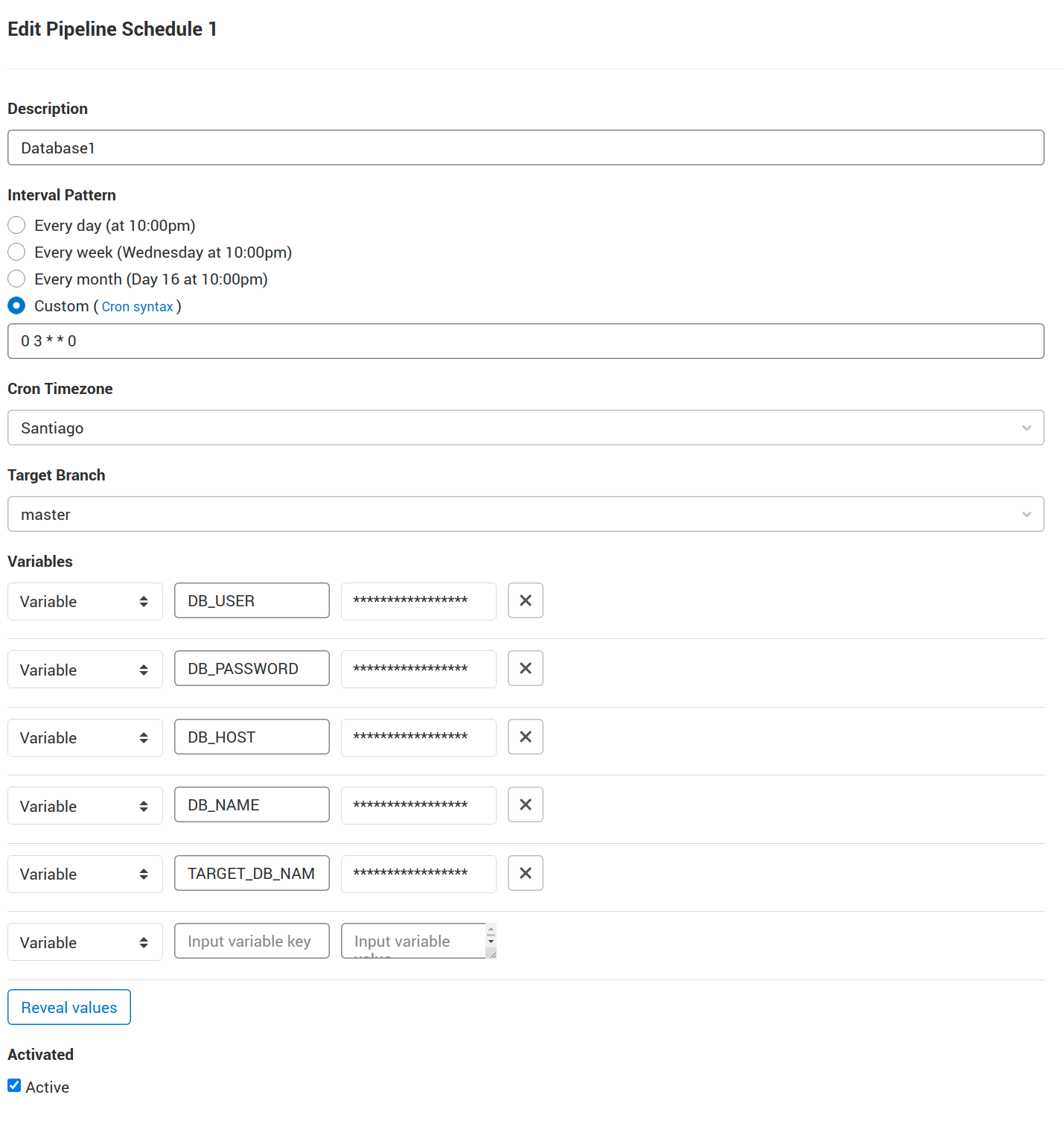

Scheduling

Gitlab offers Pipeline Scheduling. This will allow us to schedule multiple pipeline execution, with different variable values. So the first step is to prepare our code to be aware of these variables.

The variables are

| Name | Description |

|---|---|

| DB_HOST | Source database host with the format tcp:<server>,<port> |

| DB_USER | Source database user |

| DB_PASSWORD | Source database password |

| DB_NAME | Source database name |

| TARGET_DB_NAME | Target database name |

First, the backup.sh script:

1

2

3

4

5

6

7

8

9

10

11

#!/bin/bash

rm -r -f backup/

mkdir -p backup/

sqlpackage /a:Export \

/ssn:$DB_HOST \

/su:$DB_USER \

/sp:$DB_PASSWORD \

/sdn:$DB_NAME \

/tf:backup/backup.bacpac

Next, the restore.sh script:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

#!/bin/bash

# WAITING LOGIC BASED ON https://github.com/microsoft/mssql-docker/blob/master/linux/preview/examples/mssql-customize/configure-db.sh

DBSTATUS=1

ERRCODE=1

i=0

MAX_ITERATIONS=60

while [[ $DBSTATUS -ne 0 ]] && [[ $i -lt $MAX_ITERATIONS ]] || [[ $ERRCODE -ne 0 ]]; do

i=$i+1

DBSTATUS=$(/opt/mssql-tools/bin/sqlcmd -h -1 -t 1 -U sa -P $SA_PASSWORD -Q "SET NOCOUNT ON; SELECT SUM(state) FROM sys.databases")

ERRCODE=$?

sleep 1s

done

if [[ $DBSTATUS -ne 0 ]] || [[ $ERRCODE -ne 0 ]]; then

echo "SQL Server took more than $MAX_ITERATIONS seconds to start up or one or more databases are not in an ONLINE state"

exit 1

fi

sqlcmd -S localhost -P $SA_PASSWORD -U sa -Q "$(cat <<-EOF

sp_configure 'contained database authentication', 1;

GO

RECONFIGURE;

GO

EOF

)"

sqlpackage \

/a:Import \

/tsn:localhost \

/tdn:$TARGET_DB_NAME \

/tu:sa \

/tp:$SA_PASSWORD \

/sf:backup/backup.bacpac

To update the Dockerfile we just need to add:

1

2

3

4

5

6

ARG DB_HOST

ARG DB_USER

ARG DB_PASSWORD

ARG DB_NAME

ARG TARGET_DB_NAME

ENV TARGET_DB_NAME=${TARGET_DB_NAME}

These variables need to be passed to the build process, so we modify the backup.gitlab-ci.yml:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

db_backup_image:

extends:

- .default-retry

- .use-docker-in-docker

- .backup:rules:db_backup_image

stage: backup

script:

- echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin $CI_REGISTRY

- |

docker build \

--build-arg DB_HOST=${DB_HOST} \

--build-arg DB_USER=${DB_USER} \

--build-arg DB_PASSWORD=${DB_PASSWORD} \

--build-arg DB_NAME=${DB_NAME} \

--build-arg TARGET_DB_NAME=${TARGET_DB_NAME} \

-t $CI_REGISTRY_IMAGE:${DB_NAME} .

- docker push $CI_REGISTRY_IMAGE:${DB_NAME}

rules:

- if: $CI_PIPELINE_SOURCE == "schedule"

The rule

if: $CI_PIPELINE_SOURCE == "schedule"will allow GitLab to run this job only when a pipeline is triggered using the scheduling feature.

Now we can program as many database backups as we need it.